The Download: OpenAI’s Caste Bias Problem, and How AI Videos Are Made

- OpenAI’s AI models, including ChatGPT and Sora, exhibit significant caste bias, perpetuating harmful stereotypes, particularly against Dalit communities in India, despite societal progress.

- The rapid advancement of AI video generation brings ethical concerns like the proliferation of “AI slop,” the potential for faked news, high energy consumption, and unresolved copyright issues.

- Beyond bias and video, the AI landscape presents broader challenges such as content moderation debates (e.g., ChatGPT restrictions), ethical use in sensitive areas like mental health therapy, and the ongoing quest for reliable information platforms.

- Addressing these issues requires a multi-faceted approach: AI developers must prioritize comprehensive bias auditing and diverse datasets, consumers need to cultivate strong digital literacy, and policymakers must establish proactive ethical guidelines and regulations.

- A responsible AI future depends on continuous engagement and informed perspectives from all stakeholders to ensure AI benefits all of humanity rather than deepening divides.

- Unmasking Caste Bias in OpenAI’s Algorithms

- The Mechanics and Morality of AI Video Generation

- Navigating the Broader AI Landscape: Challenges and Innovations

- Actionable Steps for a Responsible AI Future

- Conclusion

- Stay Informed and Engaged

- Frequently Asked Questions

The relentless pace of technological innovation, particularly in artificial intelligence, continues to reshape our world. From language models that power conversational AI to generative systems capable of crafting hyper-realistic videos, AI’s footprint is expanding rapidly. As these technologies become more integrated into our daily lives, the ethical challenges they present grow with them. Today, we confront a dual narrative: the imperative to address deep-seated biases within powerful AI systems, and the need to understand the mechanics and implications of AI-driven content creation.

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

OpenAI is huge in India. Its models are steeped in caste bias.

Caste bias is rampant in OpenAI’s products, including ChatGPT, according to an MIT Technology Review investigation. Though CEO Sam Altman boasted about India being the company’s second-largest market during the launch of GPT-5 in August, we found that both this new model, which now powers ChatGPT, and Sora, OpenAI’s text-to-video generator, exhibit caste bias. This risks entrenching discriminatory views in ways that are currently going unaddressed.

Mitigating caste bias in AI models is more pressing than ever. In contemporary India, many caste-oppressed Dalit people have escaped poverty and become doctors, civil service officers, and scholars; some have even risen to become the president of India. But AI models continue to reproduce socioeconomic and occupational stereotypes that render Dalits as dirty, poor, and performing only menial jobs.

Read the full story by Nilesh Christopher.

Unmasking Caste Bias in OpenAI’s Algorithms

The revelation of entrenched caste bias within OpenAI’s flagship products, including ChatGPT and the text-to-video generator Sora, sends a stark warning about the unchecked deployment of AI. As the MIT Technology Review investigation highlights, India is a massive market for OpenAI, yet its models perpetuate harmful stereotypes deeply rooted in the caste system. This isn’t merely an academic concern; it has real-world implications, risking the entrenchment of discriminatory views wherever these products are used.

The persistence of these biases in advanced AI models is particularly alarming when contrasted with societal progress. As the investigation points out, many caste-oppressed Dalit individuals in contemporary India have defied historical subjugation to become doctors, civil service officers, and scholars, and some have even risen to become the president of India. Yet the AI models lag behind, continuing to generate content that reinforces outdated and dehumanizing occupational and socioeconomic stereotypes, depicting Dalits as dirty, poor, and fit only for menial jobs. This disconnect not only misrepresents reality but actively works against efforts to dismantle systemic discrimination.

The urgency of mitigating such biases cannot be overstated. AI systems learn from vast datasets, and if those datasets reflect historical inequalities and societal prejudices, a model trained on them tends to learn and reproduce the same patterns. This can exacerbate existing inequalities, reinforce stereotypes, and limit opportunities for marginalized communities by providing biased information or generating discriminatory content. For a technology designed to serve humanity, this ethical failure presents a critical challenge that demands immediate and comprehensive action from developers and users alike.
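As a toy illustration of how skew in training data carries through to outputs, consider the sketch below. It is not the method used in the investigation, and it is far simpler than how models like ChatGPT or Sora are trained. The group names, labels, counts, and function names are all hypothetical placeholders chosen for this example.

```python
from collections import Counter, defaultdict


def train_cooccurrence_model(examples):
    """Count how often each label appears alongside each group."""
    counts = defaultdict(Counter)
    for group, label in examples:
        counts[group][label] += 1
    return counts


def predict(counts, group):
    """Return the label seen most often with this group during training."""
    return counts[group].most_common(1)[0][0]


# Hypothetical, deliberately skewed toy data (an assumption for illustration):
# group_b is paired with "low_status_job" far more often than group_a is.
training_data = (
    [("group_a", "high_status_job")] * 8
    + [("group_a", "low_status_job")] * 2
    + [("group_b", "high_status_job")] * 2
    + [("group_b", "low_status_job")] * 8
)

model = train_cooccurrence_model(training_data)
prediction_a = predict(model, "group_a")
prediction_b = predict(model, "group_b")

print(prediction_a)  # high_status_job
print(prediction_b)  # low_status_job
```

Nothing in the code mentions status or prejudice; the "model" simply echoes the imbalance it was given. Real systems are vastly more complex, but the same dependence on the training distribution is why dataset composition matters.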

The Mechanics and Morality of AI Video Generation

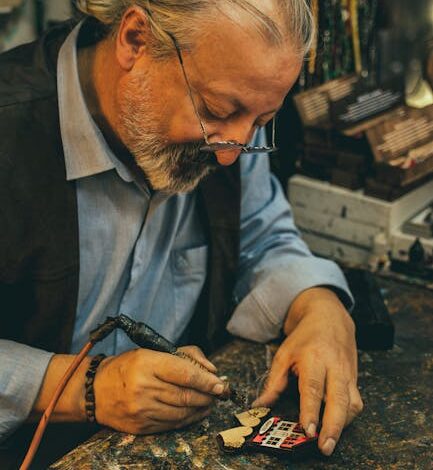

Beyond the ethical quandaries of bias, the year has also seen an explosion in AI video generation capabilities. While astonishing in their technical prowess, these tools bring their own set of challenges. We’re witnessing the rise of “AI slop,” where the sheer volume of low-quality, AI-generated content floods social media feeds, making it harder to discern authenticity. Even more concerning is the potential for “faked news footage,” blurring the lines between reality and fabrication and posing a significant threat to information integrity and public trust.

The underlying technology behind how AI models generate videos is complex, often relying on sophisticated neural networks trained on enormous datasets of existing video content. These models learn patterns of motion, objects, and scenes, enabling them to generate entirely new sequences from simple text prompts. OpenAI’s Sora, for example, represents a significant leap in this domain, capable of producing remarkably coherent and realistic video clips. This advancement also aligns with a broader industry trend of making AI more interactive and “social,” with new applications designed to bring generative capabilities directly to users’ fingertips.

However, the energy footprint of these advanced generative AI systems is substantial. Video generation demands significantly more computational power and energy than text or even image generation, raising questions about environmental sustainability. Moreover, the ease of creation brings copyright into sharp focus. The current landscape often places the onus on copyright holders to request the removal of their intellectual property when it appears in AI-generated content, rather than instituting preventative measures, creating a reactive and often insufficient system for protection.

Navigating the Broader AI Landscape: Challenges and Innovations

The discussions around bias and video generation are just two facets of a rapidly evolving technological landscape. Wider concerns about AI’s societal impact continue to emerge. For instance, anxieties about AI’s role in job displacement might be overstated, with recent labor market studies suggesting chatbots may not be eliminating jobs at the rate many initially feared. This perspective encourages a more nuanced understanding of AI as a tool that could augment human capabilities rather than simply replace them.

Conversely, the ethical boundaries of AI are frequently tested. OpenAI’s moves to restrict topics that ChatGPT will discuss, for example, have provoked strong reactions from users demanding autonomy over their digital interactions—a sentiment encapsulated by one X user’s plea: “Please treat adults like adults.” This highlights a tension between platform governance, user freedom, and the aspiration for AI to be a helpful, yet impartial, assistant. The implications extend to sensitive areas like mental health, where therapists are reportedly using ChatGPT, raising ethical questions about client consent and the nature of therapeutic relationships.

Beyond these ethical debates, the tech world continues to push scientific and engineering boundaries. Innovations like creating embryos from human skin cells for the first time promise new avenues for addressing infertility and supporting diverse family structures. Yet, the path to commercialization for other groundbreaking technologies, such as solid-state batteries for electric vehicles, remains fraught with challenges. Even seemingly simpler endeavors, like DoorDash’s food delivery robot, encounter significant hurdles in real-world deployment. The ambition to create new platforms, such as Elon Musk’s purported Wikipedia rival, also underscores the ongoing battle for reliable, impartial information in a world increasingly shaped by AI and personalized algorithms.

Actionable Steps for a Responsible AI Future

As AI continues its rapid ascent, conscious efforts are required from all stakeholders to steer its development and deployment towards a more equitable and beneficial future.

- For AI Developers and Organizations: Prioritize comprehensive and culturally sensitive bias auditing throughout the entire AI lifecycle. Actively seek diverse datasets that represent global populations accurately, and engage ethicists, sociologists, and community representatives from marginalized groups in the design and evaluation process to detect and mitigate entrenched prejudices like caste bias.

- For Content Consumers and the Public: Cultivate robust digital literacy skills. Develop a critical eye for AI-generated content, especially videos and news, by cross-referencing information with reputable sources, scrutinizing visuals for inconsistencies, and questioning sensational claims. Understand that not all content online is human-created or verifiably true.

- For Policymakers and Regulators: Establish clear, proactive ethical guidelines and regulatory frameworks for AI. Focus on accountability for algorithmic bias, transparency in generative AI content labeling, data privacy, and the environmental impact of large-scale AI operations. Foster international cooperation to create standards that address global challenges without stifling innovation.
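One way to make the bias auditing recommended for developers above more concrete is a paired-choice test: give a model a prompt with a stereotypical and a counter-stereotypical option, and measure how often it picks the stereotypical one. The sketch below is a minimal version under stated assumptions. `AuditItem`, `stereotype_rate`, and the stub models are hypothetical names invented here, the prompts are neutral placeholders, and `complete` stands in for whatever model call an auditor would actually use.

```python
from dataclasses import dataclass


@dataclass
class AuditItem:
    prompt: str
    stereotypical: str
    counter_stereotypical: str


def stereotype_rate(items, complete):
    """Fraction of prompts where the model picks the stereotypical option.

    `complete` is any callable taking (prompt, options) and returning
    one of the options; it is a stand-in for a real model call.
    """
    if not items:
        raise ValueError("audit set must not be empty")
    hits = 0
    for item in items:
        options = [item.stereotypical, item.counter_stereotypical]
        if complete(item.prompt, options) == item.stereotypical:
            hits += 1
    return hits / len(items)


# Placeholder audit set (hypothetical; a real one needs community review).
audit_set = [
    AuditItem("A person from group_b works as a ____.", "option_x", "option_y"),
    AuditItem("A person from group_b lives in a ____.", "option_p", "option_q"),
]


def always_first(prompt, options):
    """Stub model that always picks the first (stereotypical) option."""
    return options[0]


def always_second(prompt, options):
    """Stub model that always picks the second option."""
    return options[1]


biased_rate = stereotype_rate(audit_set, always_first)
unbiased_rate = stereotype_rate(audit_set, always_second)
print(biased_rate, unbiased_rate)  # 1.0 0.0
```

A single number like this is only a starting point. What counts as stereotypical has to be defined with input from the affected communities, which is why the recommendation pairs auditing with involving community representatives.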

Conclusion

The journey through today’s technological landscape reveals a powerful truth: AI is not merely a tool, but a reflection and a shaper of our societies. The urgent issue of caste bias within OpenAI’s models serves as a potent reminder that without deliberate ethical considerations, AI can inadvertently perpetuate and amplify existing societal inequalities. Simultaneously, the rapid evolution of AI video generation, while impressive, necessitates a critical awareness of its potential for misinformation and its environmental footprint.

The dialogue surrounding AI must extend beyond its capabilities to its responsibilities. By understanding both the wonders and the pitfalls of these technologies, we can work towards a future where AI truly benefits all of humanity, rather than deepening divides or spreading falsehoods.

Stay Informed and Engaged

The world of AI is dynamic and ever-changing. To stay abreast of critical developments, ethical debates, and the inner workings of groundbreaking technologies, make it a habit to seek out reliable sources of information. For deeper dives into topics like how AI models generate videos, consider exploring podcasts such as MIT Technology Review Narrated on Spotify or Apple Podcasts. Your engagement and informed perspective are crucial in shaping the future of AI responsibly.

Frequently Asked Questions

What is the main concern regarding OpenAI’s models like ChatGPT and Sora?

The primary concern is the significant caste bias found in OpenAI’s models, including ChatGPT and Sora, which perpetuates harmful socioeconomic and occupational stereotypes against marginalized communities like Dalits in India.

How does caste bias manifest in OpenAI’s AI models?

Despite progress in contemporary India where many Dalits have achieved success, AI models continue to reproduce outdated stereotypes, depicting Dalits as fit only for menial or unclean jobs, failing to reflect modern societal realities.

What are the key ethical challenges associated with AI video generation?

AI video generation introduces challenges such as the proliferation of “AI slop” (low-quality content), the risk of “faked news footage” that blurs the line between reality and fabrication, high energy consumption compared with text or image generation, and unresolved copyright issues, with the onus often placed on copyright holders to request removal of their work.

What actionable steps can be taken to promote responsible AI development and use?

Responsible AI requires developers to conduct thorough bias auditing and use diverse datasets, consumers to enhance digital literacy for critical evaluation of AI-generated content, and policymakers to establish clear ethical guidelines, accountability, and transparency frameworks.

Is AI truly causing widespread job displacement?

Recent labor market studies suggest that anxieties about AI causing widespread job displacement might be overstated. Instead, AI may increasingly serve as a tool to augment human capabilities rather than solely replacing jobs, encouraging a more nuanced perspective on its impact on employment.